(from github.com/abolotnov)

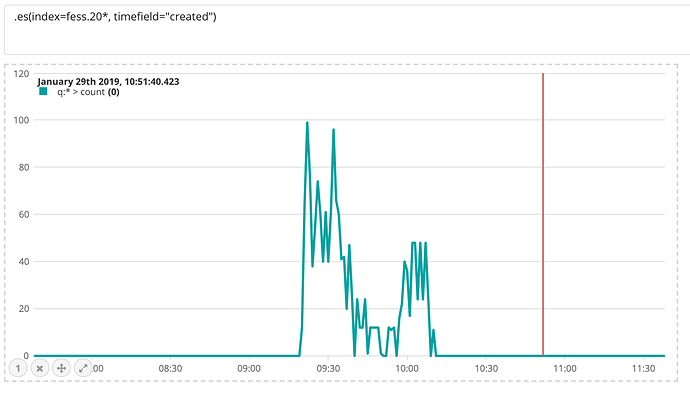

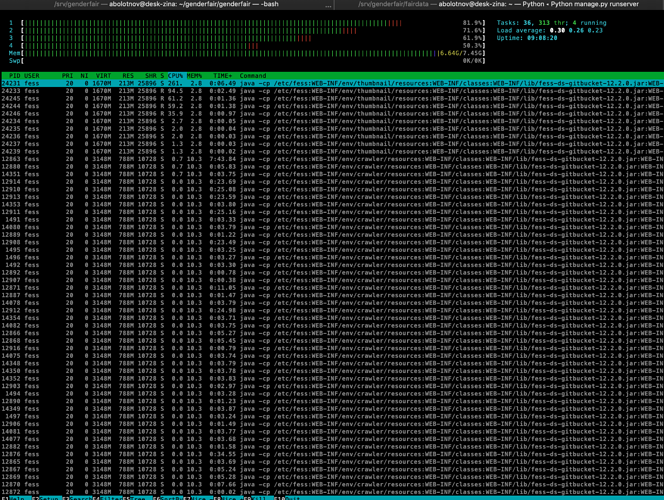

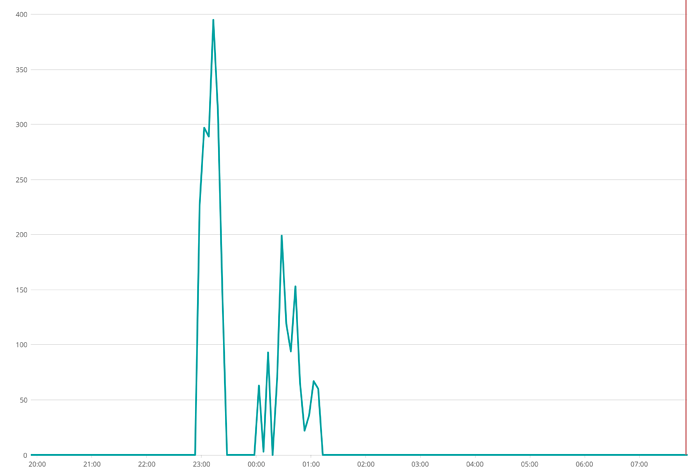

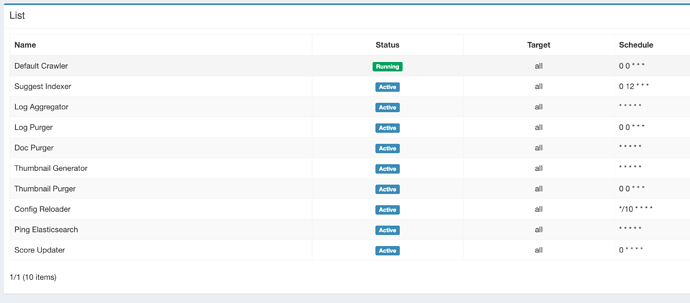

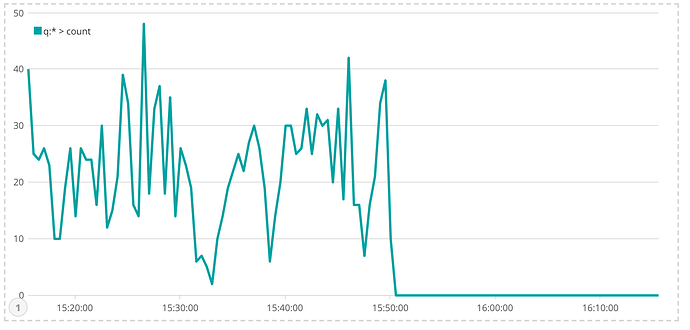

OK, it worked for some time and then stopped adding documents to the index again.

I see a lot of entries like this in the logs:

2019-01-29 16:41:14,384 [CoreLib-TimeoutManager] DEBUG Closing expired connections

2019-01-29 16:41:14,384 [CoreLib-TimeoutManager] DEBUG Closing connections idle longer than 60000 MILLISECONDS

2019-01-29 16:41:14,384 [CoreLib-TimeoutManager] DEBUG Closing expired connections

2019-01-29 16:41:14,384 [CoreLib-TimeoutManager] DEBUG Closing connections idle longer than 60000 MILLISECONDS

2019-01-29 16:41:14,384 [CoreLib-TimeoutManager] DEBUG Closing expired connections

2019-01-29 16:41:14,384 [CoreLib-TimeoutManager] DEBUG Closing connections idle longer than 60000 MILLISECONDS

2019-01-29 16:41:14,384 [CoreLib-TimeoutManager] DEBUG Closing expired connections

2019-01-29 16:41:14,384 [CoreLib-TimeoutManager] DEBUG Closing connections idle longer than 60000 MILLISECONDS

2019-01-29 16:41:14,426 [Crawler-20190129142043-32-7] DEBUG The url is null. (16074)

2019-01-29 16:41:14,426 [Crawler-20190129142043-169-8] DEBUG The url is null. (12341)

2019-01-29 16:41:14,426 [Crawler-20190129142043-213-5] DEBUG The url is null. (10563)

2019-01-29 16:41:14,426 [Crawler-20190129142043-168-7] DEBUG The url is null. (12360)

2019-01-29 16:41:14,426 [Crawler-20190129142043-43-8] DEBUG The url is null. (15927)

2019-01-29 16:41:14,431 [Crawler-20190129142043-32-1] DEBUG The url is null. (16073)

2019-01-29 16:41:14,431 [Crawler-20190129142043-43-3] DEBUG The url is null. (15928)

2019-01-29 16:41:14,501 [Crawler-20190129142043-168-4] DEBUG The url is null. (12360)

`.crawl_queue` has over 12K items in it.
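
To put numbers on the log noise above, here is a rough sketch that tallies messages from a log in this format. The line layout (timestamp, `[thread]`, level, message) is inferred only from the excerpt, and the helper name `summarize` and the sample list are made up for illustration, so treat it as a starting point rather than a proper parser.

```python
import re
from collections import Counter

# Assumed line layout, inferred from the excerpt above:
# 2019-01-29 16:41:14,426 [Crawler-20190129142043-32-7] DEBUG The url is null. (16074)
LINE_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"\[(?P<thread>[^\]]+)\] (?P<level>[A-Z]+) (?P<msg>.*)$"
)

def summarize(lines):
    """Count log messages, ignoring a trailing '(12345)' number if present."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # skip blank or unrecognized lines
        msg = re.sub(r"\s*\(\d+\)$", "", m.group("msg"))
        counts[msg] += 1
    return counts

# Hypothetical sample: a few lines copied from the excerpt above.
sample = [
    "2019-01-29 16:41:14,384 [CoreLib-TimeoutManager] DEBUG Closing expired connections",
    "",
    "2019-01-29 16:41:14,426 [Crawler-20190129142043-32-7] DEBUG The url is null. (16074)",
    "2019-01-29 16:41:14,426 [Crawler-20190129142043-169-8] DEBUG The url is null. (12341)",
]

for message, count in summarize(sample).most_common():
    print(count, message)
```

Running the same function over the full log file (passing the open file object as `lines`) should show whether `The url is null.` dominates the crawler threads' output during the stall.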