(from github.com/abolotnov)

I have around 5K web crawlers configured, each with max_access = 32. When I start the Default Crawler, it only seems to process a portion of them (maybe 100 or so) and then stops. The only suspicious thing I see in the logs is "Future got interrupted". Otherwise everything looks OK, but it doesn’t even touch most of the sites.

(from github.com/abolotnov)

Here’s the tail of the log:

2019-01-26 04:39:10,211 [Crawler-20190126043059-89-4] ERROR Crawling Exception at https://www.advanceddisposal.com/for-business/collection-services/recycling.aspx

java.lang.IllegalStateException: Future got interrupted

at org.elasticsearch.common.util.concurrent.FutureUtils.get(FutureUtils.java:82) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.action.support.AdapterActionFuture.actionGet(AdapterActionFuture.java:54) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.codelibs.fess.crawler.client.EsClient.get(EsClient.java:207) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.AbstractCrawlerService.get(AbstractCrawlerService.java:326) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.EsDataService.getAccessResult(EsDataService.java:86) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.EsDataService.getAccessResult(EsDataService.java:40) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.transformer.FessTransformer.getParentEncoding(FessTransformer.java:258) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.FessTransformer.decodeUrlAsName(FessTransformer.java:231) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.FessTransformer.getFileName(FessTransformer.java:206) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.FessXpathTransformer.putAdditionalData(FessXpathTransformer.java:424) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.FessXpathTransformer.storeData(FessXpathTransformer.java:181) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.impl.HtmlTransformer.transform(HtmlTransformer.java:120) ~[fess-crawler-2.4.4.jar:?]

at org.codelibs.fess.crawler.processor.impl.DefaultResponseProcessor.process(DefaultResponseProcessor.java:77) ~[fess-crawler-2.4.4.jar:?]

at org.codelibs.fess.crawler.CrawlerThread.processResponse(CrawlerThread.java:330) [fess-crawler-2.4.4.jar:?]

at org.codelibs.fess.crawler.FessCrawlerThread.processResponse(FessCrawlerThread.java:240) ~[classes/:?]

at org.codelibs.fess.crawler.CrawlerThread.run(CrawlerThread.java:176) [fess-crawler-2.4.4.jar:?]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_191]

Caused by: java.lang.InterruptedException

at java.util.concurrent.locks.AbstractQueuedSynchronizer.tryAcquireSharedNanos(AbstractQueuedSynchronizer.java:1326) ~[?:1.8.0_191]

at org.elasticsearch.common.util.concurrent.BaseFuture$Sync.get(BaseFuture.java:234) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.common.util.concurrent.BaseFuture.get(BaseFuture.java:69) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.common.util.concurrent.FutureUtils.get(FutureUtils.java:77) ~[elasticsearch-6.5.4.jar:6.5.4]

... 16 more

2019-01-26 04:39:10,222 [WebFsCrawler] INFO [EXEC TIME] crawling time: 484436ms

2019-01-26 04:39:10,222 [main] INFO Finished Crawler

2019-01-26 04:39:10,254 [main] INFO [CRAWL INFO] DataCrawlEndTime=2019-01-26T04:31:05.742+0000,CrawlerEndTime=2019-01-26T04:39:10.223+0000,WebFsCrawlExecTime=484436,CrawlerStatus=true,CrawlerStartTime=2019-01-26T04:31:05.704+0000,WebFsCrawlEndTime=2019-01-26T04:39:10.222+0000,WebFsIndexExecTime=329841,WebFsIndexSize=1738,CrawlerExecTime=484519,DataCrawlStartTime=2019-01-26T04:31:05.729+0000,WebFsCrawlStartTime=2019-01-26T04:31:05.729+0000

2019-01-26 04:39:42,416 [main] INFO Disconnected to elasticsearch:localhost:9300

2019-01-26 04:39:42,418 [Crawler-20190126043059-4-12] INFO I/O exception (java.net.SocketException) caught when processing request to {}->http://www.americanaddictioncenters.com:80: Socket closed

2019-01-26 04:39:42,418 [Crawler-20190126043059-4-12] INFO Retrying request to {}->http://www.americanaddictioncenters.com:80

2019-01-26 04:39:42,418 [Crawler-20190126043059-33-1] INFO I/O exception (java.net.SocketException) caught when processing request to {}->http://www.arborrealtytrust.com:80: Socket closed

2019-01-26 04:39:42,418 [Crawler-20190126043059-33-1] INFO Retrying request to {}->http://www.arborrealtytrust.com:80

2019-01-26 04:39:42,419 [Crawler-20190126043059-4-12] INFO Could not process http://www.americanaddictioncenters.com/robots.txt. Connection pool shut down

2019-01-26 04:39:42,419 [Crawler-20190126043059-33-1] INFO Could not process http://www.arborrealtytrust.com/robots.txt. Connection pool shut down

2019-01-26 04:39:43,414 [main] INFO Destroyed LaContainer.
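The [CRAWL INFO] line in the log above is a flat list of Key=value pairs, which makes runs easy to compare. If WebFsIndexSize=1738 is the number of documents indexed in that run (an assumption based on the field name), and roughly 5,000 configs × max_access_count = 32 = 160,000 is the most the job could index, then this run covered only about 1% of that ceiling. A minimal sketch of that check; the CrawlInfo class is hypothetical and not part of Fess:

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

public class CrawlInfo {
    private static final String MARKER = "[CRAWL INFO] ";

    // Splits the payload of a "[CRAWL INFO]" line ("Key=value,Key=value,...")
    // into an ordered map. Assumes values contain no ',' or '=', which holds for
    // the lines in this thread. The log timestamp, which does contain a comma,
    // comes before the marker and is skipped.
    static Map<String, String> parse(String line) {
        int start = line.indexOf(MARKER);
        String payload = start >= 0 ? line.substring(start + MARKER.length()) : line;
        Map<String, String> fields = new LinkedHashMap<>();
        for (String pair : payload.split(",")) {
            int eq = pair.indexOf('=');
            if (eq > 0) {
                fields.put(pair.substring(0, eq).trim(), pair.substring(eq + 1).trim());
            }
        }
        return fields;
    }

    public static void main(String[] args) {
        // Shortened copy of the first [CRAWL INFO] line in the log.
        String line = "2019-01-26 04:39:10,254 [main] INFO [CRAWL INFO] "
                + "WebFsCrawlExecTime=484436,CrawlerStatus=true,WebFsIndexSize=1738";
        Map<String, String> info = parse(line);

        long indexed = Long.parseLong(info.get("WebFsIndexSize"));
        // Rough ceiling if all ~5,000 configs reached max_access_count = 32.
        long ceiling = 5_000L * 32;
        System.out.printf(Locale.ROOT, "indexed=%d ceiling=%d (%.1f%%)%n",
                indexed, ceiling, 100.0 * indexed / ceiling);
        // prints: indexed=1738 ceiling=160000 (1.1%)
    }
}
```

The same parse applied to the second run's line gives WebFsIndexSize=1482, so both runs stop far below the ceiling.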

(from github.com/marevol)

Why did you set max_access = 32?

Also, check the Elasticsearch log file.

(from github.com/abolotnov)

I don’t want to store too many pages per site at this point; I just want to check how many sites are going to be accessible. The Elasticsearch logs seem to be OK. Is there something specific I should look for? I don’t see anything other than some exceptions I trigger myself while trying to graph things in Kibana. When I restart the Default Crawler job, it works again and adds more documents, but it seems to start with the same crawl configs, so not many new documents get added to the index.

(from github.com/abolotnov)

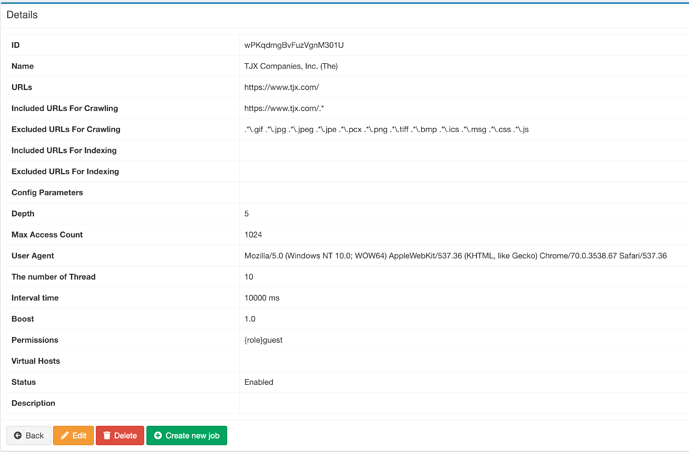

Here’s a sample of my crawler config:

{

"name": "AMZN",

"urls": "http://www.amazon.com/

https://www.amazon.com/",

"included_urls": "http://www.amazon.com/.*

https://www.amazon.com/.*",

"excluded_urls": ".*\\.gif .*\\.jpg .*\\.jpeg .*\\.jpe .*\\.pcx .*\\.png .*\\.tiff .*\\.bmp .*\\.ics .*\\.msg .*\\.css .*\\.js",

"user_agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.67 Safari/537.36",

"num_of_thread": 16,

"interval_time": 10,

"sort_order": 1,

"boost": 1.0,

"available": "True",

"permissions": "{role}guest",

"depth": 5,

"max_access_count": 32

}
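In this config, urls and included_urls put one entry per line, while excluded_urls puts all of its patterns on a single line separated by spaces. If Fess reads each line of these fields as one regular expression (an assumption here, consistent with how the other two fields are written), that single line becomes one long pattern that only matches URLs containing literal spaces, so images and CSS would not actually be excluded. The sketch below uses plain java.util.regex to show the difference; the UrlFilterCheck class and its whole-URL matches() semantics are hypothetical, not Fess's filter code:

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

public class UrlFilterCheck {
    // Assumption: one regex per line; blank lines are ignored.
    static List<Pattern> compile(String field) {
        return Arrays.stream(field.split("\\r?\\n"))
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .map(Pattern::compile)
                .collect(Collectors.toList());
    }

    // Assumption: a URL is excluded when any pattern matches the whole URL.
    static boolean excluded(String url, List<Pattern> patterns) {
        return patterns.stream().anyMatch(p -> p.matcher(url).matches());
    }

    public static void main(String[] args) {
        String url = "https://www.amazon.com/images/logo.gif";
        // Space-separated, as in excluded_urls above: a single long regex.
        List<Pattern> oneLine = compile(".*\\.gif .*\\.jpg .*\\.png");
        // Newline-separated: three independent regexes.
        List<Pattern> perLine = compile(".*\\.gif\n.*\\.jpg\n.*\\.png");
        System.out.println(excluded(url, oneLine)); // prints: false
        System.out.println(excluded(url, perLine)); // prints: true
    }
}
```

If that assumption holds for the Fess version in use, putting each excluded pattern on its own line is the change to try.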

(from github.com/abolotnov)

After I started the Default Crawler again, it worked for a while and then finished:

2019-01-26 05:07:45,813 [Crawler-20190126045933-89-7] ERROR Crawling Exception at https://www.advanceddisposal.com/for-business/disposal-recycling-services/special-waste/blackfoot-landfill-winslow,-in.aspx

java.lang.IllegalStateException: Future got interrupted

at org.elasticsearch.common.util.concurrent.FutureUtils.get(FutureUtils.java:82) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.action.support.AdapterActionFuture.actionGet(AdapterActionFuture.java:54) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.codelibs.fess.crawler.client.EsClient.get(EsClient.java:207) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.AbstractCrawlerService.get(AbstractCrawlerService.java:326) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.EsDataService.getAccessResult(EsDataService.java:86) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.EsDataService.getAccessResult(EsDataService.java:40) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.transformer.FessTransformer.getParentEncoding(FessTransformer.java:258) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.FessTransformer.decodeUrlAsName(FessTransformer.java:231) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.FessTransformer.getFileName(FessTransformer.java:206) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.FessXpathTransformer.putAdditionalData(FessXpathTransformer.java:424) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.FessXpathTransformer.storeData(FessXpathTransformer.java:181) ~[classes/:?]

at org.codelibs.fess.crawler.transformer.impl.HtmlTransformer.transform(HtmlTransformer.java:120) ~[fess-crawler-2.4.4.jar:?]

at org.codelibs.fess.crawler.processor.impl.DefaultResponseProcessor.process(DefaultResponseProcessor.java:77) ~[fess-crawler-2.4.4.jar:?]

at org.codelibs.fess.crawler.CrawlerThread.processResponse(CrawlerThread.java:330) [fess-crawler-2.4.4.jar:?]

at org.codelibs.fess.crawler.FessCrawlerThread.processResponse(FessCrawlerThread.java:240) ~[classes/:?]

at org.codelibs.fess.crawler.CrawlerThread.run(CrawlerThread.java:176) [fess-crawler-2.4.4.jar:?]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_191]

Caused by: java.lang.InterruptedException

at java.util.concurrent.locks.AbstractQueuedSynchronizer.tryAcquireSharedNanos(AbstractQueuedSynchronizer.java:1326) ~[?:1.8.0_191]

at org.elasticsearch.common.util.concurrent.BaseFuture$Sync.get(BaseFuture.java:234) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.common.util.concurrent.BaseFuture.get(BaseFuture.java:69) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.common.util.concurrent.FutureUtils.get(FutureUtils.java:77) ~[elasticsearch-6.5.4.jar:6.5.4]

... 16 more

2019-01-26 05:07:46,306 [WebFsCrawler] INFO [EXEC TIME] crawling time: 487118ms

2019-01-26 05:07:46,307 [main] INFO Finished Crawler

2019-01-26 05:07:46,327 [main] INFO [CRAWL INFO] DataCrawlEndTime=2019-01-26T04:59:39.151+0000,CrawlerEndTime=2019-01-26T05:07:46.307+0000,WebFsCrawlExecTime=487118,CrawlerStatus=true,CrawlerStartTime=2019-01-26T04:59:39.114+0000,WebFsCrawlEndTime=2019-01-26T05:07:46.306+0000,WebFsIndexExecTime=320871,WebFsIndexSize=1482,CrawlerExecTime=487193,DataCrawlStartTime=2019-01-26T04:59:39.136+0000,WebFsCrawlStartTime=2019-01-26T04:59:39.135+0000

2019-01-26 05:08:28,267 [main] INFO Disconnected to elasticsearch:localhost:9300

2019-01-26 05:08:28,268 [Crawler-20190126045933-4-1] INFO I/O exception (java.net.SocketException) caught when processing request to {}->http://www.americanaddictioncenters.com:80: Socket closed

2019-01-26 05:08:28,268 [Crawler-20190126045933-4-1] INFO Retrying request to {}->http://www.americanaddictioncenters.com:80

2019-01-26 05:08:28,268 [Crawler-20190126045933-33-1] INFO I/O exception (java.net.SocketException) caught when processing request to {}->http://www.arborrealtytrust.com:80: Socket closed

2019-01-26 05:08:28,268 [Crawler-20190126045933-33-1] INFO Retrying request to {}->http://www.arborrealtytrust.com:80

2019-01-26 05:08:28,268 [Crawler-20190126045933-33-1] INFO Could not process http://www.arborrealtytrust.com/robots.txt. Connection pool shut down

2019-01-26 05:08:28,268 [Crawler-20190126045933-4-1] INFO Could not process http://www.americanaddictioncenters.com/robots.txt. Connection pool shut down

2019-01-26 05:08:29,264 [main] INFO Destroyed LaContainer.

(from github.com/abolotnov)

I see many exceptions of this sort:

2019-01-26 05:07:31,313 [Crawler-20190126045933-88-12] ERROR Crawling Exception at https://www.autodesk.com/sitemap-autodesk-dotcom.xml

org.codelibs.fess.crawler.exception.EsAccessException: Failed to insert [UrlQueueImpl [id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZHZhbmNlc3RlZWwvbGVhcm5pbmctY2VudGVy, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/advancesteel/learning-center, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487], UrlQueueImpl [id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZHZhbmNlc3RlZWwvd2hpdGVwYXBlcg, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/advancesteel/whitepaper, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487], UrlQueueImpl [id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZHZhbmNlLXN0ZWVsLWluLXRyaWFsLW1hcmtldGluZy9jb250YWN0LW1l, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/advance-steel-in-trial-marketing/contact-me, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487], UrlQueueImpl [id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZHZhbmNlLXN0ZWVsLXBsYW50LWtvLWNvbnRhY3QtbWU, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/advance-steel-plant-ko-contact-me, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487], UrlQueueImpl [id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZHZhbmNlLXN0ZWVsLXRyaWFs, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/advance-steel-trial, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487], UrlQueueImpl 
[id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZWMtY2xvdWQtY29ubmVjdGVkLXByb21vdGlvbg, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/aec-cloud-connected-promotion, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487], UrlQueueImpl [id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZWMtY29sbGFib3JhdGlvbi1wcm9tb3Rpb24, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/aec-collaboration-promotion, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487], UrlQueueImpl [id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZWMtY29sbGFib3JhdGlvbi1wcm9tb3Rpb24tdGNz, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/aec-collaboration-promotion-tcs, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487], UrlQueueImpl [id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZWMtY29sbGVjdGlvbi1jb250YWN0LW1lLWVuLXVz, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/aec-collection-contact-me-en-us, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487], UrlQueueImpl [id=20190126045933-88.aHR0cHM6Ly93d3cuYXV0b2Rlc2suY29tL2NhbXBhaWducy9hZWMtY29sbGVjdGlvbi1tdWx0aS11c2Vy, sessionId=20190126045933-88, method=GET, url=https://www.autodesk.com/campaigns/aec-collection-multi-user, encoding=null, parentUrl=https://www.autodesk.com/sitemap-autodesk-dotcom.xml, depth=3, lastModified=null, createTime=1548479224487]]

at org.codelibs.fess.crawler.service.impl.AbstractCrawlerService.doInsertAll(AbstractCrawlerService.java:301) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.AbstractCrawlerService.lambda$insertAll$6(AbstractCrawlerService.java:243) ~[fess-crawler-es-2.4.4.jar:?]

at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1382) ~[?:1.8.0_191]

at java.util.stream.ReferencePipeline$Head.forEach(ReferencePipeline.java:580) ~[?:1.8.0_191]

at org.codelibs.fess.crawler.service.impl.AbstractCrawlerService.insertAll(AbstractCrawlerService.java:240) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.EsUrlQueueService.offerAll(EsUrlQueueService.java:179) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.CrawlerThread.storeChildUrls(CrawlerThread.java:357) [fess-crawler-2.4.4.jar:?]

at org.codelibs.fess.crawler.CrawlerThread.run(CrawlerThread.java:196) [fess-crawler-2.4.4.jar:?]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_191]

Caused by: java.lang.IllegalStateException: Future got interrupted

at org.elasticsearch.common.util.concurrent.FutureUtils.get(FutureUtils.java:82) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.action.support.AdapterActionFuture.actionGet(AdapterActionFuture.java:54) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.codelibs.fess.crawler.client.EsClient.get(EsClient.java:207) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.AbstractCrawlerService.doInsertAll(AbstractCrawlerService.java:288) ~[fess-crawler-es-2.4.4.jar:?]

... 8 more

Caused by: java.lang.InterruptedException

at java.util.concurrent.locks.AbstractQueuedSynchronizer.doAcquireSharedNanos(AbstractQueuedSynchronizer.java:1039) ~[?:1.8.0_191]

at java.util.concurrent.locks.AbstractQueuedSynchronizer.tryAcquireSharedNanos(AbstractQueuedSynchronizer.java:1328) ~[?:1.8.0_191]

at org.elasticsearch.common.util.concurrent.BaseFuture$Sync.get(BaseFuture.java:234) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.common.util.concurrent.BaseFuture.get(BaseFuture.java:69) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.common.util.concurrent.FutureUtils.get(FutureUtils.java:77) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.elasticsearch.action.support.AdapterActionFuture.actionGet(AdapterActionFuture.java:54) ~[elasticsearch-6.5.4.jar:6.5.4]

at org.codelibs.fess.crawler.client.EsClient.get(EsClient.java:207) ~[fess-crawler-es-2.4.4.jar:?]

at org.codelibs.fess.crawler.service.impl.AbstractCrawlerService.doInsertAll(AbstractCrawlerService.java:288) ~[fess-crawler-es-2.4.4.jar:?]

... 8 more

Does it have something to do with the problem?
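All three traces share the same root-cause chain: a crawler thread is blocked waiting for an Elasticsearch response, the thread is interrupted, the JDK throws InterruptedException, and FutureUtils.get rethrows it as IllegalStateException("Future got interrupted"). In the first two traces the error is logged within a second of "Finished Crawler", which suggests these are threads still waiting when the crawl was shutting down rather than the cause of the shutdown. A JDK-only sketch of that pattern; getOrThrow is a hypothetical wrapper that only imitates the wrapping seen in the trace, not the Elasticsearch code:

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicReference;

public class InterruptDemo {
    // Hypothetical wrapper: turns the checked InterruptedException into an
    // unchecked IllegalStateException, as seen in the stack traces.
    static <T> T getOrThrow(Future<T> future) {
        try {
            return future.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt flag set
            throw new IllegalStateException("Future got interrupted", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        }
    }

    public static void main(String[] args) throws Exception {
        // A future that never completes, standing in for a pending Elasticsearch call.
        CompletableFuture<String> pending = new CompletableFuture<>();
        AtomicReference<Throwable> seen = new AtomicReference<>();
        CountDownLatch done = new CountDownLatch(1);

        Thread worker = new Thread(() -> {
            try {
                getOrThrow(pending);
            } catch (IllegalStateException e) {
                seen.set(e);
            } finally {
                done.countDown();
            }
        }, "Crawler-worker");
        worker.start();

        Thread.sleep(100);  // give the worker time to block in get()
        worker.interrupt(); // what stopping the crawler threads would do
        done.await();

        Throwable t = seen.get();
        System.out.println(t.getMessage() + " / cause: " + t.getCause().getClass().getSimpleName());
        // prints: Future got interrupted / cause: InterruptedException
    }
}
```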

(from github.com/marevol)

Did you check the Elasticsearch log file?

(from github.com/abolotnov)

Yes, I don’t see anything bad in it. It’s mostly lots of this:

[2019-01-26T04:01:26,239][INFO ][o.c.e.f.i.a.JapaneseKatakanaStemmerFactory] [t8cOK8R] [.fess_config.access_token] org.codelibs.elasticsearch.extension.analysis.KuromojiKatakanaStemmerFactory is found.

[2019-01-26T04:01:26,248][INFO ][o.c.e.f.i.a.JapanesePartOfSpeechFilterFactory] [t8cOK8R] [.fess_config.access_token] org.codelibs.elasticsearch.extension.analysis.KuromojiPartOfSpeechFilterFactory is found.

[2019-01-26T04:01:26,256][INFO ][o.c.e.f.i.a.JapaneseReadingFormFilterFactory] [t8cOK8R] [.fess_config.access_token] org.codelibs.elasticsearch.extension.analysis.KuromojiReadingFormFilterFactory is found.

[2019-01-26T04:01:26,259][INFO ][o.e.c.m.MetaDataMappingService] [t8cOK8R] [.fess_config.access_token/o-csvKs0SMyFvBIr2_wCEA] create_mapping [access_token]

[2019-01-26T04:01:26,400][INFO ][o.c.e.f.i.a.JapaneseIterationMarkCharFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiIterationMarkCharFilterFactory is found.

[2019-01-26T04:01:26,408][INFO ][o.c.e.f.i.a.JapaneseTokenizerFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.ReloadableKuromojiTokenizerFactory is found.

[2019-01-26T04:01:26,417][INFO ][o.c.e.f.i.a.ReloadableJapaneseTokenizerFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.ReloadableKuromojiTokenizerFactory is found.

[2019-01-26T04:01:26,439][INFO ][o.c.e.f.i.a.JapaneseBaseFormFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiBaseFormFilterFactory is found.

[2019-01-26T04:01:26,447][INFO ][o.c.e.f.i.a.JapaneseKatakanaStemmerFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiKatakanaStemmerFactory is found.

[2019-01-26T04:01:26,456][INFO ][o.c.e.f.i.a.JapanesePartOfSpeechFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiPartOfSpeechFilterFactory is found.

[2019-01-26T04:01:26,465][INFO ][o.c.e.f.i.a.JapaneseReadingFormFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiReadingFormFilterFactory is found.

[2019-01-26T04:01:26,466][INFO ][o.e.c.m.MetaDataCreateIndexService] [t8cOK8R] [.fess_config.bad_word] creating index, cause [api], templates [], shards [1]/[0], mappings []

[2019-01-26T04:01:26,482][INFO ][o.c.e.f.i.a.JapaneseIterationMarkCharFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiIterationMarkCharFilterFactory is found.

[2019-01-26T04:01:26,491][INFO ][o.c.e.f.i.a.JapaneseTokenizerFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.ReloadableKuromojiTokenizerFactory is found.

[2019-01-26T04:01:26,499][INFO ][o.c.e.f.i.a.ReloadableJapaneseTokenizerFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.ReloadableKuromojiTokenizerFactory is found.

[2019-01-26T04:01:26,521][INFO ][o.c.e.f.i.a.JapaneseBaseFormFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiBaseFormFilterFactory is found.

[2019-01-26T04:01:26,529][INFO ][o.c.e.f.i.a.JapaneseKatakanaStemmerFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiKatakanaStemmerFactory is found.

[2019-01-26T04:01:26,538][INFO ][o.c.e.f.i.a.JapanesePartOfSpeechFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiPartOfSpeechFilterFactory is found.

[2019-01-26T04:01:26,546][INFO ][o.c.e.f.i.a.JapaneseReadingFormFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiReadingFormFilterFactory is found.

[2019-01-26T04:01:26,588][INFO ][o.e.c.r.a.AllocationService] [t8cOK8R] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[.fess_config.bad_word][0]] ...]).

[2019-01-26T04:01:26,635][INFO ][o.c.e.f.i.a.JapaneseIterationMarkCharFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiIterationMarkCharFilterFactory is found.

[2019-01-26T04:01:26,643][INFO ][o.c.e.f.i.a.JapaneseTokenizerFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.ReloadableKuromojiTokenizerFactory is found.

[2019-01-26T04:01:26,651][INFO ][o.c.e.f.i.a.ReloadableJapaneseTokenizerFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.ReloadableKuromojiTokenizerFactory is found.

[2019-01-26T04:01:26,672][INFO ][o.c.e.f.i.a.JapaneseBaseFormFilterFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiBaseFormFilterFactory is found.

[2019-01-26T04:01:26,681][INFO ][o.c.e.f.i.a.JapaneseKatakanaStemmerFactory] [t8cOK8R] [.fess_config.bad_word] org.codelibs.elasticsearch.extension.analysis.KuromojiKatakanaStemmerFactory is found.

(from github.com/marevol)

It seems to be a logging problem…

I’ll improve it in a future release.

(from github.com/abolotnov)

Here is another scenario: the Default Crawler is running but not actually capturing any pages. It just keeps reporting system info and "Processing no docs", and that’s all.

2019-01-26 06:47:44,194 [Crawler-20190126061518-99-2] INFO Crawling URL: https://www.ameren.com/business-partners/account-data-management

2019-01-26 06:47:44,414 [Crawler-20190126061518-99-2] INFO Crawling URL: https://www.ameren.com/business-partners/real-estate

2019-01-26 06:47:44,656 [Crawler-20190126061518-99-2] INFO Crawling URL: https://www.ameren.com/sustainability/employee-sustainability

2019-01-26 06:47:45,976 [Crawler-20190126061518-99-4] INFO META(robots=noindex,nofollow): https://www.ameren.com/account/forgot-userid

2019-01-26 06:47:47,615 [IndexUpdater] INFO Processing 20/31 docs (Doc:{access 7ms}, Mem:{used 1GB, heap 1GB, max 31GB})

2019-01-26 06:47:47,700 [IndexUpdater] INFO Processing 1/11 docs (Doc:{access 3ms, cleanup 30ms}, Mem:{used 1GB, heap 1GB, max 31GB})

2019-01-26 06:47:47,717 [IndexUpdater] INFO Processing 0/10 docs (Doc:{access 4ms, cleanup 10ms}, Mem:{used 1GB, heap 1GB, max 31GB})

2019-01-26 06:47:52,720 [IndexUpdater] INFO Processing 10/10 docs (Doc:{access 3ms}, Mem:{used 1GB, heap 1GB, max 31GB})

2019-01-26 06:47:52,765 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 1GB, heap 1GB, max 31GB})

2019-01-26 06:47:52,920 [IndexUpdater] INFO Sent 32 docs (Doc:{process 84ms, send 155ms, size 1MB}, Mem:{used 1GB, heap 1GB, max 31GB})

2019-01-26 06:47:52,922 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 1GB, heap 1GB, max 31GB})

2019-01-26 06:47:52,949 [IndexUpdater] INFO Deleted completed document data. The execution time is 27ms.

2019-01-26 06:47:56,313 [Crawler-20190126061518-100-1] INFO Crawling URL: http://www.aberdeench.com/

2019-01-26 06:47:56,316 [Crawler-20190126061518-100-1] INFO Checking URL: http://www.aberdeench.com/robots.txt

2019-01-26 06:47:57,132 [Crawler-20190126061518-100-1] INFO Redirect to URL: http://www.aberdeenaef.com/

2019-01-26 06:48:02,921 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 1GB, heap 1GB, max 31GB})

2019-01-26 06:48:07,813 [Crawler-20190126061518-4-1] INFO I/O exception (java.net.SocketException) caught when processing request to {}->http://www.americanaddictioncenters.com:80: Connection timed out (Read failed)

2019-01-26 06:48:07,813 [Crawler-20190126061518-4-1] INFO Retrying request to {}->http://www.americanaddictioncenters.com:80

2019-01-26 06:48:12,922 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 791MB, heap 1GB, max 31GB})

2019-01-26 06:48:22,922 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 792MB, heap 1GB, max 31GB})

2019-01-26 06:48:22,933 [IndexUpdater] INFO Deleted completed document data. The execution time is 11ms.

2019-01-26 06:48:25,904 [CoreLib-TimeoutManager] INFO [SYSTEM MONITOR] {"os":{"memory":{"physical":{"free":82318585856,"total":128920035328},"swap_space":{"free":0,"total":0}},"cpu":{"percent":1},"load_averages":[1.08, 2.16, 2.67]},"process":{"file_descriptor":{"open":477,"max":65535},"cpu":{"percent":0,"total":314320},"virtual_memory":{"total":44742717440}},"jvm":{"memory":{"heap":{"used":832015712,"committed":1948188672,"max":34246361088,"percent":2},"non_heap":{"used":136460680,"committed":144531456}},"pools":{"direct":{"count":99,"used":541081601,"capacity":541081600},"mapped":{"count":0,"used":0,"capacity":0}},"gc":{"young":{"count":88,"time":1038},"old":{"count":2,"time":157}},"threads":{"count":104,"peak":112},"classes":{"loaded":13391,"total_loaded":13525,"unloaded":134},"uptime":1987680},"elasticsearch":null,"timestamp":1548485305904}

2019-01-26 06:48:32,921 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 795MB, heap 1GB, max 31GB})

2019-01-26 06:48:42,922 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 797MB, heap 1GB, max 31GB})

2019-01-26 06:48:52,921 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 799MB, heap 1GB, max 31GB})

2019-01-26 06:49:02,922 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 800MB, heap 1GB, max 31GB})

2019-01-26 06:49:12,921 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 802MB, heap 1GB, max 31GB})

2019-01-26 06:49:22,922 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 804MB, heap 1GB, max 31GB})

2019-01-26 06:49:25,954 [CoreLib-TimeoutManager] INFO [SYSTEM MONITOR] {"os":{"memory":{"physical":{"free":82327228416,"total":128920035328},"swap_space":{"free":0,"total":0}},"cpu":{"percent":0},"load_averages":[0.4, 1.77, 2.5]},"process":{"file_descriptor":{"open":464,"max":65535},"cpu":{"percent":0,"total":315460},"virtual_memory":{"total":44742717440}},"jvm":{"memory":{"heap":{"used":844083448,"committed":1948188672,"max":34246361088,"percent":2},"non_heap":{"used":136528264,"committed":144662528}},"pools":{"direct":{"count":99,"used":541081601,"capacity":541081600},"mapped":{"count":0,"used":0,"capacity":0}},"gc":{"young":{"count":88,"time":1038},"old":{"count":2,"time":157}},"threads":{"count":104,"peak":112},"classes":{"loaded":13391,"total_loaded":13525,"unloaded":134},"uptime":2047731},"elasticsearch":null,"timestamp":1548485365953}

2019-01-26 06:49:32,922 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 806MB, heap 1GB, max 31GB})

2019-01-26 06:49:42,923 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 808MB, heap 1GB, max 31GB})

2019-01-26 06:49:52,924 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 810MB, heap 1GB, max 31GB})

2019-01-26 06:50:02,924 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 812MB, heap 1GB, max 31GB})

2019-01-26 06:50:12,923 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 813MB, heap 1GB, max 31GB})

2019-01-26 06:50:22,924 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 815MB, heap 1GB, max 31GB})

2019-01-26 06:50:25,999 [CoreLib-TimeoutManager] INFO [SYSTEM MONITOR] {"os":{"memory":{"physical":{"free":82332979200,"total":128920035328},"swap_space":{"free":0,"total":0}},"cpu":{"percent":0},"load_averages":[0.14, 1.44, 2.34]},"process":{"file_descriptor":{"open":464,"max":65535},"cpu":{"percent":0,"total":316070},"virtual_memory":{"total":44742717440}},"jvm":{"memory":{"heap":{"used":856040960,"committed":1948188672,"max":34246361088,"percent":2},"non_heap":{"used":136556104,"committed":144662528}},"pools":{"direct":{"count":99,"used":541081601,"capacity":541081600},"mapped":{"count":0,"used":0,"capacity":0}},"gc":{"young":{"count":88,"time":1038},"old":{"count":2,"time":157}},"threads":{"count":104,"peak":112},"classes":{"loaded":13391,"total_loaded":13525,"unloaded":134},"uptime":2107776},"elasticsearch":null,"timestamp":1548485425999}

2019-01-26 06:50:32,923 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 818MB, heap 1GB, max 31GB})

2019-01-26 06:50:42,924 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 820MB, heap 1GB, max 31GB})

2019-01-26 06:50:52,923 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 821MB, heap 1GB, max 31GB})

2019-01-26 06:51:02,924 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 823MB, heap 1GB, max 31GB})

2019-01-26 06:51:12,925 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 825MB, heap 1GB, max 31GB})

2019-01-26 06:51:22,925 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 827MB, heap 1GB, max 31GB})

2019-01-26 06:51:26,044 [CoreLib-TimeoutManager] INFO [SYSTEM MONITOR] {"os":{"memory":{"physical":{"free":82317217792,"total":128920035328},"swap_space":{"free":0,"total":0}},"cpu":{"percent":0},"load_averages":[0.05, 1.18, 2.2]},"process":{"file_descriptor":{"open":464,"max":65535},"cpu":{"percent":0,"total":316590},"virtual_memory":{"total":44742717440}},"jvm":{"memory":{"heap":{"used":867934096,"committed":1948188672,"max":34246361088,"percent":2},"non_heap":{"used":136557432,"committed":144662528}},"pools":{"direct":{"count":99,"used":541081601,"capacity":541081600},"mapped":{"count":0,"used":0,"capacity":0}},"gc":{"young":{"count":88,"time":1038},"old":{"count":2,"time":157}},"threads":{"count":104,"peak":112},"classes":{"loaded":13391,"total_loaded":13525,"unloaded":134},"uptime":2167821},"elasticsearch":null,"timestamp":1548485486044}

2019-01-26 06:51:32,924 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 829MB, heap 1GB, max 31GB})

2019-01-26 06:51:42,924 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 831MB, heap 1GB, max 31GB})

2019-01-26 06:51:52,925 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 833MB, heap 1GB, max 31GB})

2019-01-26 06:52:02,924 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 834MB, heap 1GB, max 31GB})

2019-01-26 06:52:12,925 [IndexUpdater] INFO Processing no docs (Doc:{access 2ms, cleanup 17ms}, Mem:{used 836MB, heap 1GB, max 31GB})

2019-01-26 06:52:22,924 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 838MB, heap 1GB, max 31GB})
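In this log the last "Crawling URL" entry is at 06:47:56, while IndexUpdater keeps reporting "Processing no docs" every 10 seconds for several minutes. A small sketch for measuring that idle stretch in a longer log, assuming every log entry starts with a timestamp in the yyyy-MM-dd HH:mm:ss,SSS format shown above; the IdleScan class is hypothetical:

```java
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.util.Arrays;
import java.util.List;

public class IdleScan {
    // Leading timestamp of each entry, e.g. "2019-01-26 06:47:56,313" (23 chars).
    private static final DateTimeFormatter TS =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss,SSS");

    // Returns the leading timestamp, or null for lines without one
    // (blank lines, stack-trace frames, wrapped text).
    static LocalDateTime timestamp(String line) {
        if (line.length() < 23) {
            return null;
        }
        try {
            return LocalDateTime.parse(line.substring(0, 23), TS);
        } catch (DateTimeParseException e) {
            return null;
        }
    }

    // Time from the last "Crawling URL:" entry to the last timestamped entry,
    // or null if no URL was crawled at all.
    static Duration idleSinceLastCrawl(List<String> lines) {
        LocalDateTime lastCrawl = null;
        LocalDateTime lastSeen = null;
        for (String line : lines) {
            LocalDateTime ts = timestamp(line);
            if (ts == null) {
                continue;
            }
            lastSeen = ts;
            if (line.contains("Crawling URL:")) {
                lastCrawl = ts;
            }
        }
        return lastCrawl == null ? null : Duration.between(lastCrawl, lastSeen);
    }

    public static void main(String[] args) {
        // Three entries copied from the log above.
        List<String> log = Arrays.asList(
                "2019-01-26 06:47:56,313 [Crawler-20190126061518-100-1] INFO Crawling URL: http://www.aberdeench.com/",
                "2019-01-26 06:48:02,921 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 1GB, heap 1GB, max 31GB})",
                "2019-01-26 06:52:22,924 [IndexUpdater] INFO Processing no docs (Doc:{access 1ms, cleanup 17ms}, Mem:{used 838MB, heap 1GB, max 31GB})");
        System.out.println(idleSinceLastCrawl(log)); // prints: PT4M26.611S
    }
}
```

To run it on the real file, read the crawler log with java.nio.file.Files.readAllLines; its directory and base name are set by -Dfess.log.path and -Dfess.log.name in the jps output below.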

(from github.com/abolotnov)

The crawler process seems to be there, but it isn’t doing anything:

sudo jps -v

43781 FessBoot -Xms512m -Xmx512m -Djava.awt.headless=true -Djna.nosys=true -Djdk.io.permissionsUseCanonicalPath=true -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Dlog4j.skipJansi=true -XX:+HeapDumpOnOutOfMemoryError -XX:+DisableExplicitGC -Dfile.encoding=UTF-8 -Dgroovy.use.classvalue=true -Dfess.home=/usr/share/fess -Dfess.context.path=/ -Dfess.port=8080 -Dfess.webapp.path=/usr/share/fess/app -Dfess.temp.path=/var/tmp/fess -Dfess.log.name=fess -Dfess.log.path=/var/log/fess -Dfess.log.level=warn -Dlasta.env=web -Dtomcat.config.path=tomcat_config.properties -Dfess.conf.path=/etc/fess -Dfess.var.path=/var/lib/fess -Dfess.es.http_address=http://localhost:9200 -Dfess.es.transport_addresses=localhost:9300 -Dfess.dictionary.path=/var/lib/elasticsearch/config/ -Dfess -Dfess.foreground=yes -Dfess.es.dir=/usr/share/elas

60166 Jps -Dapplication.home=/usr/lib/jvm/java-8-openjdk-amd64 -Xms8m

49863 Crawler -Dfess.es.transport_addresses=localhost:9300 -Dfess.es.cluster_name=elasticsearch -Dlasta.env=crawler -Dfess.conf.path=/etc/fess -Dfess.crawler.process=true -Dfess.log.path=/var/log/fess -Dfess.log.name=fess-crawler -Dfess.log.level=info -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -Djdk.io.permissionsUseCanonicalPath=true -Xmx32G -XX:MaxMetaspaceSize=512m -XX:CompressedClassSpaceSize=128m -XX:-UseGCOverheadLimit -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+UseTLAB -XX:+DisableExplicitGC -XX:+HeapDumpOnOutOfMemoryError -XX:-OmitStackTraceInFastThrow -Djcifs.smb.client.responseTimeout=30000 -Djcifs.smb.client.soTimeout=35000 -Djcifs.smb.client.connTimeout=60000 -Djcifs.smb.client.sessionTimeout=60000 -Dgroovy.use.classvalue=true -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Dlog4j.skipJansi=true -Dsun.java2d.cmm=sun.j
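In the output above, FessBoot is started with `-Xmx512m` and the Crawler process with `-Xmx32G`. Here is a small hypothetical helper that pulls out each process's `-Xmx` setting, so this kind of output is easier to compare between machines. It assumes the `jps -v` layout `<pid> <name> <jvm args...>`.

```python
def parse_jps(output):
    """Map process name -> (pid, Xmx value or None) from `jps -v` output."""
    result = {}
    for line in output.splitlines():
        parts = line.split()
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        pid, name, args = int(parts[0]), parts[1], parts[2:]
        xmx = None
        for arg in args:
            # Keep the last -Xmx seen; the JVM also uses the last occurrence.
            if arg.startswith("-Xmx"):
                xmx = arg[len("-Xmx"):]
        result[name] = (pid, xmx)
    return result
```

If two processes share a name, the later line overwrites the earlier one. That is acceptable here because only one FessBoot and one Crawler are running.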

(from github.com/marevol)

"Processing no docs" means the indexing queue is empty.

(from github.com/abolotnov)

Yeah, but I had 5K web crawlers with one site each, and it only collected documents (one or more) for 125 of them. Something is not working out for me.

(from github.com/marevol)

If you can provide steps to reproduce it in my environment, I’ll check it.

(from github.com/abolotnov)

Well, I configure FESS per the instructions, then create 5K web crawlers with the following config via the admin API:

{

"name": "AMZN",

"urls": "http://www.amazon.com/\nhttps://www.amazon.com/",

"included_urls": "http://www.amazon.com/.*\nhttps://www.amazon.com/.*",

"excluded_urls": ".*\\.gif .*\\.jpg .*\\.jpeg .*\\.jpe .*\\.pcx .*\\.png .*\\.tiff .*\\.bmp .*\\.ics .*\\.msg .*\\.css .*\\.js",

"user_agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.67 Safari/537.36",

"num_of_thread": 6,

"interval_time": 10,

"sort_order": 1,

"boost": 1.0,

"available": "True",

"permissions": "{role}guest",

"depth": 5,

"max_access_count": 32

}

The only fields that differ between crawlers are name, urls, and included_urls.
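Because only three fields vary, all 5K request bodies can come from one template. The sketch below assumes the field names in the JSON above. `TEMPLATE`, `make_web_config` and the `domain` argument are hypothetical. It joins multiple URLs and patterns with a bare newline and no padding spaces.

```python
import json

# Shared settings copied from the config above; only name/urls/included_urls vary.
TEMPLATE = {
    "excluded_urls": r".*\.gif .*\.jpg .*\.jpeg .*\.jpe .*\.pcx .*\.png .*\.tiff .*\.bmp .*\.ics .*\.msg .*\.css .*\.js",
    "user_agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.67 Safari/537.36",
    "num_of_thread": 6,
    "interval_time": 10,
    "sort_order": 1,
    "boost": 1.0,
    "available": "True",
    "permissions": "{role}guest",
    "depth": 5,
    "max_access_count": 32,
}


def make_web_config(name, domain):
    """Build one admin-API body for a single-site crawler (hypothetical helper)."""
    body = dict(TEMPLATE)  # copy so the template itself is never mutated
    body["name"] = name
    # One entry per line, with no spaces around the newline.
    body["urls"] = "\n".join([f"http://{domain}/", f"https://{domain}/"])
    body["included_urls"] = "\n".join([f"http://{domain}/.*", f"https://{domain}/.*"])
    return body


payload = make_web_config("AMZN", "www.amazon.com")
print(json.dumps(payload, indent=2))
```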

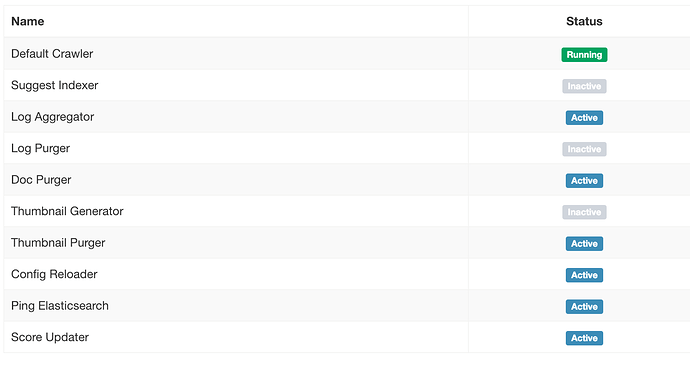

I disable the thumbnail generator job and start the Default Crawler. After some time, the Default Crawler just stops and leaves documents for only about 100 config_ids or so.

I can get you the list of sites or maybe dump the config_web index - let me know how I can help to reproduce it.
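To make "documents for about 100 config_ids" an exact list, one option is to run a terms aggregation on the documents' `config_id` field and compare the buckets with the crawler IDs. This sketch assumes each `config_id` value is the web config ID with a type prefix. The `"W"` default is an assumption, so check a few documents in your index before relying on it. Both functions are hypothetical and make no network calls.

```python
def config_id_aggregation(max_configs=10000):
    """Elasticsearch query body: count documents per config_id, return no hits."""
    return {
        "size": 0,
        "aggs": {"by_config": {"terms": {"field": "config_id", "size": max_configs}}},
    }


def configs_without_docs(web_config_ids, agg_response, prefix="W"):
    """Return the web config IDs that have no documents in the aggregation response.

    `prefix` is an assumed type prefix on config_id values; adjust it to
    whatever your documents actually contain.
    """
    buckets = agg_response["aggregations"]["by_config"]["buckets"]
    seen = {b["key"] for b in buckets if b["doc_count"] > 0}
    return sorted(cid for cid in web_config_ids if prefix + cid not in seen)
```

The IDs it returns can then be looked up in `.fess_config.web_config` to see whether the missing sites have anything in common.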

(from github.com/abolotnov)

I can generate a large JSON file with all the admin API objects for creating the web crawlers, if that helps.

(from github.com/marevol)

Could you provide actual steps to do that?

For example:

- …

- …

- …

…

The commands you executed, or something like them, would be better.

(from github.com/abolotnov)

Sorry, maybe I don’t understand, but this is what I do:

- Install and configure Elasticsearch and FESS on an AWS instance.

- Generate an access token in FESS.

- Use a Python script to make the admin API calls that create the web crawlers (around 5,000). I have a Jupyter notebook that does this, and I have no problem sharing it, but it needs a database and an ORM backend. Let me know if you want the list of sites I use, or a JSON file with all the admin API objects I PUT to the API to create the web crawlers.

- After the crawlers are created, I go to the FESS admin UI to check that they exist (see the screenshot of how they look in the UI), then manually go to System > Scheduling and start the Default Crawler job from there.
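The PUT step above can be reproduced with only the Python standard library. This is a sketch, not the notebook described above. The endpoint path `/api/admin/webconfig/setting` and sending the token as the bare `Authorization` header value are assumptions; check both against the Admin API documentation for your Fess version. `build_put_request` and `create_all` are hypothetical helpers.

```python
import json
import urllib.request

FESS_URL = "http://localhost:8080"  # assumed; matches -Dfess.port=8080 in the jps output
ENDPOINT = "/api/admin/webconfig/setting"  # assumed Admin API path


def build_put_request(payload, token, base_url=FESS_URL):
    """Build (but do not send) a PUT request that creates one web crawler config."""
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        base_url + ENDPOINT,
        data=data,
        method="PUT",
        headers={"Authorization": token, "Content-Type": "application/json"},
    )


def create_all(payloads, token):
    """Send one PUT per payload and collect the HTTP status codes.

    urlopen raises urllib.error.HTTPError on 4xx/5xx responses, so a failed
    create stops the loop instead of being silently skipped.
    """
    statuses = []
    for payload in payloads:
        with urllib.request.urlopen(build_put_request(payload, token)) as resp:
            statuses.append(resp.status)
    return statuses
```

Building the request separately from sending it makes it easy to inspect a few bodies before firing 5,000 of them.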

Does this make sense?

(from github.com/abolotnov)

I think I have a little more info. I tried again on a clean box, and the symptoms are very similar: the Scheduling page says the Default Crawler is running, but in fact it stops crawling anything after about the 100th site.

mkdir tmp && cd tmp

wget https://github.com/codelibs/fess/releases/download/fess-12.4.3/fess-12.4.3.deb

wget https://artifacts.elastic.co/downloads/kibana/kibana-6.5.4-amd64.deb

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.5.4.deb

sudo apt-get update

sudo apt-get install openjdk-8-jre

sudo apt install ./elasticsearch-6.5.4.deb

sudo apt-get install ./kibana-6.5.4-amd64.deb

sudo apt install ./fess-12.4.3.deb

sudo nano /etc/elasticsearch/elasticsearch.yml

^ added configsync.config_path: /var/lib/elasticsearch/config

sudo nano /etc/elasticsearch/jvm.options

^ adjusted the heap memory settings

sudo nano /etc/kibana/kibana.yml

^ added a non-loopback host so Kibana can be accessed externally

sudo /usr/share/elasticsearch/bin/elasticsearch-plugin install org.codelibs:elasticsearch-analysis-fess:6.5.0

sudo /usr/share/elasticsearch/bin/elasticsearch-plugin install org.codelibs:elasticsearch-analysis-extension:6.5.1

sudo /usr/share/elasticsearch/bin/elasticsearch-plugin install org.codelibs:elasticsearch-configsync:6.5.0

sudo /usr/share/elasticsearch/bin/elasticsearch-plugin install org.codelibs:elasticsearch-dataformat:6.5.0

sudo /usr/share/elasticsearch/bin/elasticsearch-plugin install org.codelibs:elasticsearch-langfield:6.5.0

sudo /usr/share/elasticsearch/bin/elasticsearch-plugin install org.codelibs:elasticsearch-minhash:6.5.0

sudo /usr/share/elasticsearch/bin/elasticsearch-plugin install org.codelibs:elasticsearch-learning-to-rank:6.5.0

sudo service elasticsearch start

sudo service kibana start

sudo service fess start

sudo tail -f /var/log/fess/fess-crawler.log
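While `tail -f` is running, counting errors can be more reliable than scanning for them by eye. The hypothetical helper below tallies log levels and `Future got interrupted` exceptions. It assumes the `<date> <time> [<thread>] <LEVEL> <message>` layout seen in these logs. The log path is the one from the command above.

```python
import re
from collections import Counter

# <date> <time> [<thread>] <LEVEL> <message>
LINE_RE = re.compile(r"^\S+ \S+ \[(?P<thread>[^\]]+)\] (?P<level>[A-Z]+) (?P<msg>.*)$")


def summarize_log(lines):
    """Count log levels and occurrences of 'Future got interrupted'."""
    levels = Counter()
    interrupted = 0
    for line in lines:
        m = LINE_RE.match(line)
        if m:
            levels[m.group("level")] += 1
        # Exception and stack-trace lines don't match LINE_RE, so check every line.
        if "Future got interrupted" in line:
            interrupted += 1
    return levels, interrupted


def summarize_file(path="/var/log/fess/fess-crawler.log"):
    """Run summarize_log over a log file, tolerating odd bytes."""
    with open(path, encoding="utf-8", errors="replace") as f:
        return summarize_log(f)
```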

Next steps are:

- Go to Access Token and create one:

{

"_index": ".fess_config.access_token",

"_type": "access_token",

"_id": "S4iBlWgBnlDwesKS-gLp",

"_version": 1,

"_score": 1,

"_source": {

"updatedTime": 1548696550112,

"updatedBy": "admin",

"createdBy": "admin",

"permissions": [

"Radmin-api"

],

"name": "Bot",

"createdTime": 1548696550112,

"token": "klbmvQ4UJNXspPE1pOMT1mUqQPNGGk1jgNEaX#mO7RGkVz1EkIGL8sk8iZk1"

}

}

Now, with the access token, I create a lot of web crawlers. They look like this in Elasticsearch:

{

"_index": ".fess_config.web_config",

"_type": "web_config",

"_id": "UYiDlWgBnlDwesKS2gLx",

"_version": 1,

"_score": 1,

"_source": {

"updatedTime": 1548696673008,

"includedUrls": "http://www.kroger.com/.* \n https://www.kroger.com/.*",

"virtualHosts": [],

"updatedBy": "guest",

"excludedUrls": ".*\\.gif .*\\.jpg .*\\.jpeg .*\\.jpe .*\\.pcx .*\\.png .*\\.tiff .*\\.bmp .*\\.ics .*\\.msg .*\\.css .*\\.js",

"available": true,

"maxAccessCount": 24,

"userAgent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.67 Safari/537.36",

"numOfThread": 8,

"urls": "http://www.kroger.com/ \n https://www.kroger.com/",

"depth": 5,

"createdBy": "guest",

"permissions": [

"Rguest"

],

"sortOrder": 1,

"name": "KR",

"createdTime": 1548696673008,

"boost": 1,

"intervalTime": 10

}

}
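In this document, `urls` and `includedUrls` contain ` \n `, a newline padded with spaces. This thread does not confirm whether Fess trims that whitespace. If it doesn't, the second pattern would become ` https://www.kroger.com/.*` with a leading space and might never match. Here is a hypothetical helper that normalizes such fields before they are sent:

```python
def normalize_lines(value):
    """Split a newline-separated field, trim each entry, drop empties, rejoin."""
    entries = [entry.strip() for entry in value.splitlines()]
    return "\n".join(entry for entry in entries if entry)


def normalize_web_config(source):
    """Return a copy of a web_config _source with its URL list fields normalized."""
    cleaned = dict(source)  # leave the caller's dict untouched
    for field in ("urls", "includedUrls"):
        if isinstance(cleaned.get(field), str):
            cleaned[field] = normalize_lines(cleaned[field])
    return cleaned
```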

Then I change the Remove Documents Before parameter to 100 so that FESS doesn’t delete documents from the index. I also disable the thumbnail generation job and similar jobs, and start the Default Crawler.

The crawler reports that it is active, but it hasn’t crawled a single page for a long time now. I have 1000 crawlers in the system, but it crawled only about 100 of them and then stopped crawling further pages.

I don’t see anything in the logs that reports a problem. Let me know if you want me to look elsewhere.